How VM data is managed within an HPE SimpliVity cluster, Part 1: Data creation and storage

By Damian Erangey, Senior Systems/Software Engineer, HPE Software-Defined and Cloud Group

This blog is the first in a 5-part series on VM data storage and management.

While speaking with a customer recently, I was asked how the data that makes up a virtual machine is stored within an HPE SimpliVity cluster, and how this data affects the overall available capacity within that cluster.

This is essentially a two-part question, and the first part is relatively straightforward. The second part, however, leads to the age-old response: “it depends!” Realistically, it can only be answered by digging a little deeper into a customer’s specific environment and ensuring they have a good grasp of the HPE SimpliVity hyperconverged architecture. An understanding of that architecture is beneficial for any existing or prospective HPE SimpliVity customer, so I have decided to write a 5-part series explaining how virtual machine data is stored and managed within an HPE SimpliVity cluster, and how this leads to a better understanding of data consumption and management techniques within that cluster.

The aim of these posts is to provide a deeper view of the HPE SimpliVity architecture and to clear up some common misconceptions about it. The series is split into the following topics:

- Part 1: The data creation process: How data is stored within an HPE SimpliVity cluster

- Parts 2 & 3: How the HPE SimpliVity platform automatically manages this data

- Part 4: How we, as administrators, can manage this data

- Part 5: Understanding, reporting on, and managing capacity

The aim is to help you develop an understanding of the architecture and apply the knowledge gained to your own environment.

Parts of these posts are reworked from posts on my personal blog, damianerangey.com, and at times those posts may contain more detailed information. Where that is the case, I will reference where to find further reading.

The data creation process: How data is stored within an HPE SimpliVity cluster

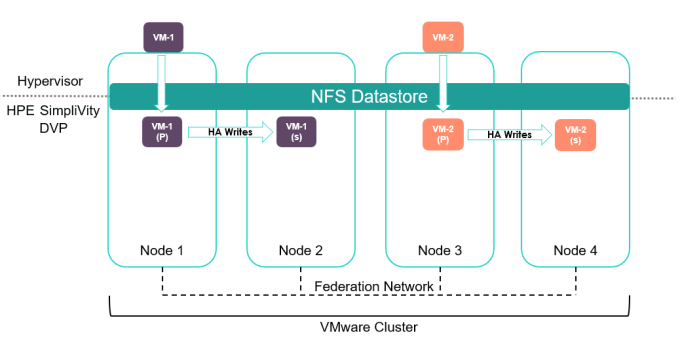

Data associated with a VM is not spread across all hosts in a cluster; rather, it is stored as an HA pair on two nodes within the cluster.

Looking at the diagram above of a 4-node HPE SimpliVity cluster, from a VMware perspective all nodes access a single, shared SimpliVity datastore.

Think of the NFS datastore as the entry point into the HPE SimpliVity file system. The DVP (Data Virtualization Platform) presents a simple root-level file system whose contents are the directories within the NFS datastore. The DVP maps each datastore operation into an HPE SimpliVity operation, such as read, write, make directory, and remove directory.

Each time a new VM is created on, or moved to, an HPE SimpliVity datastore, a new folder is created on the NFS datastore containing all the files associated with that virtual machine. Two copies of the virtual machine are automatically created within the cluster, regardless of the number of nodes in that cluster (except in a single-node cluster). The redundancy of all HPE SimpliVity virtual machines is N+1, excluding backups. We call these copies data containers.

The space used to store a virtual machine is consumed within the cluster from the moment the VM is created, for both its primary and its secondary copy. This value may then increase or decrease over the life of the VM, depending on deduplication and compression.
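To make the capacity point concrete, here is a rough back-of-the-envelope model. It is a simplification of our own, not HPE's capacity accounting: it assumes each data container shrinks by one combined deduplication/compression ratio, and the function name and parameters are hypothetical.

```python
def cluster_space_consumed_gb(logical_size_gb: float,
                              dedup_compression_ratio: float = 1.0,
                              copies: int = 2) -> float:
    """Approximate physical space a VM consumes across the whole cluster.

    Hypothetical model: the VM has `copies` data containers (primary and
    secondary, so 2 in any multi-node cluster), and each copy is reduced by
    a single combined deduplication/compression ratio (2.0 means 2:1).
    """
    if dedup_compression_ratio <= 0:
        raise ValueError("ratio must be positive")
    return logical_size_gb * copies / dedup_compression_ratio


# A 100 GB VM with no data reduction consumes 200 GB across the cluster.
print(cluster_space_consumed_gb(100))        # 200.0
# The same VM at a 2:1 reduction ratio consumes 100 GB.
print(cluster_space_consumed_gb(100, 2.0))   # 100.0
```

The point the model illustrates is that both copies are charged from day one; only the reduction ratio moves over time.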

What is an HPE SimpliVity Data Container?

An HPE SimpliVity data container contains the entire contents of a VM. Various services within the DVP are responsible for ownership and activation of this data container along with making it highly available.

The data container is its own file system. The DVP organizes its metadata, directories and files within those directories as a hash tree of SHA-1 hashes. It processes all typical file system operations along with read and write operations.

Unlike traditional file systems that map file offset ranges to sets of disk LBA ranges, the HPE SimpliVity DVP references any data by its SHA-1 hash (signature). The DVP divides data into 8K objects, and these objects are managed by the object store.
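The idea of content-addressed 8K objects can be sketched in a few lines of Python. This is a toy illustration only: `ObjectStore`, `write_file`, `read_file`, and `root_hash` are hypothetical names, and the real DVP object store, hash-tree layout, and on-disk format are not modeled. `root_hash` shows just one level of a hash tree.

```python
import hashlib

OBJECT_SIZE = 8 * 1024  # 8K objects, as described above


class ObjectStore:
    """Toy content-addressed store keyed by SHA-1 signature (illustration only)."""

    def __init__(self) -> None:
        self.objects: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        signature = hashlib.sha1(data).hexdigest()
        # Identical content produces the same signature, so it is stored once.
        self.objects.setdefault(signature, data)
        return signature

    def get(self, signature: str) -> bytes:
        return self.objects[signature]


def write_file(store: ObjectStore, data: bytes) -> list[str]:
    """Split data into 8K objects and return their SHA-1 signatures in order."""
    return [store.put(data[i:i + OBJECT_SIZE])
            for i in range(0, len(data), OBJECT_SIZE)]


def read_file(store: ObjectStore, signatures: list[str]) -> bytes:
    """Reassemble a file by looking up each object by its signature."""
    return b"".join(store.get(sig) for sig in signatures)


def root_hash(signatures: list[str]) -> str:
    """Hash the child signatures together: one level of a hash tree."""
    h = hashlib.sha1()
    for sig in signatures:
        h.update(bytes.fromhex(sig))
    return h.hexdigest()


store = ObjectStore()
data = b"A" * OBJECT_SIZE * 3 + b"B" * 100  # three identical 8K objects plus a tail
sigs = write_file(store, data)
print(len(sigs), len(store.objects))  # 4 2
print(read_file(store, sigs) == data)  # True
```

Because the file is described by signatures rather than disk offsets, the three identical 8K objects occupy space only once, which is the basis for the deduplication mentioned earlier.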

Placement of Data Containers

The HPE SimpliVity Intelligent Workload Optimizer (IWO) takes a multidimensional approach, based on CPU, memory, and storage metrics, to deciding which nodes host the primary and secondary data containers. In our simplified diagram, IWO chose nodes 1 and 2 for VM-1’s data containers and nodes 3 and 4 for VM-2’s data containers.

IWO aims to balance workloads across nodes in order to avoid costly migrations of data containers in the future.
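IWO's actual placement algorithm is not described here, so the following is only a hypothetical heuristic to illustrate the multidimensional idea: score each node by a weighted sum of free CPU, memory, and storage, then place the two data containers on the two best-scoring distinct nodes. The `Node` fields, weights, and function names are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpu_free: float      # fraction of CPU headroom, 0.0-1.0 (hypothetical metric)
    mem_free: float      # fraction of memory headroom, 0.0-1.0
    storage_free: float  # fraction of storage headroom, 0.0-1.0


def headroom_score(node: Node, weights=(1.0, 1.0, 1.0)) -> float:
    """Hypothetical score: weighted sum of free CPU, memory and storage."""
    w_cpu, w_mem, w_sto = weights
    return w_cpu * node.cpu_free + w_mem * node.mem_free + w_sto * node.storage_free


def place_data_containers(nodes: list[Node]) -> tuple[str, str]:
    """Pick two distinct nodes for the primary and secondary data containers."""
    if len(nodes) < 2:
        raise ValueError("HA placement needs at least two nodes")
    ranked = sorted(nodes, key=headroom_score, reverse=True)
    return ranked[0].name, ranked[1].name


cluster = [
    Node("node1", 0.6, 0.5, 0.7),
    Node("node2", 0.5, 0.6, 0.6),
    Node("node3", 0.2, 0.3, 0.4),
    Node("node4", 0.3, 0.2, 0.5),
]
print(place_data_containers(cluster))  # ('node1', 'node2')
```

Considering all three resources together, rather than storage alone, is what keeps a placement from looking good on capacity while overloading a node's CPU or memory.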

Primary and Secondary Data Containers

One SimpliVity data container will be chosen as the primary (or active) copy of the data (actively serving all I/O) while a secondary copy is kept in HA sync at all times. HA writes occur over the 10 Gb federation network between nodes.

IWO always enforces data locality by pinning a virtual machine, through DRS affinity rules, to the two nodes that contain a copy of its data. This is also handled at initial virtual machine creation.

In the first diagram, IWO placed VM-1 on Node 1, so the data container on Node 1 is the primary (or active) copy of the data.

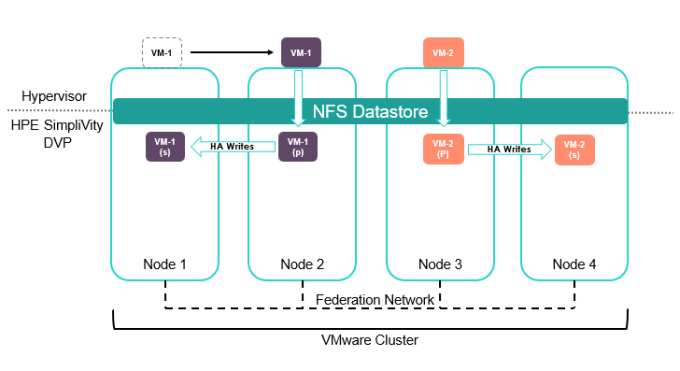

In the diagram below, VM-1 has been migrated to Node 2. As a result, the secondary (or standby) data container on Node 2 is automatically activated to become the primary, and the data container on Node 1 becomes the secondary. The direction of HA writes is reversed. Virtual machines are unaware of this whole process.

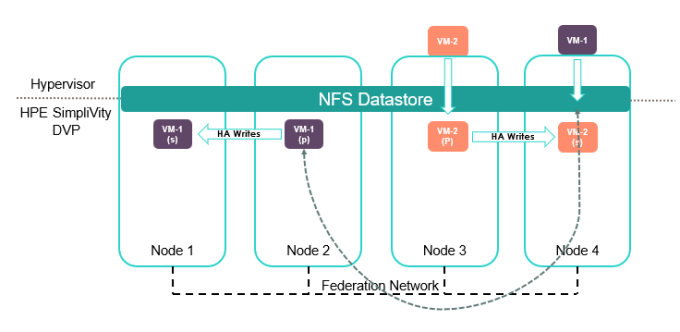

It is possible, and supported, to run virtual machines on nodes that do not hold a primary or secondary copy of their data. In this scenario, I/O travels from the node over the federation network to the node where the primary data container is located.
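The primary/secondary behavior described above can be summarized as a small state model. This is a hypothetical sketch, not HPE code: `DataContainerPair`, `on_vm_moved`, and `io_path` are invented names, and the strings merely describe where I/O goes.

```python
from dataclasses import dataclass


@dataclass
class DataContainerPair:
    """Hypothetical model of one VM's HA pair of data containers."""
    primary: str    # node holding the active copy (serves all I/O)
    secondary: str  # node holding the standby copy (kept in HA sync)

    def on_vm_moved(self, new_host: str) -> None:
        # Moving the VM to the node with the standby copy swaps the roles,
        # so HA writes now flow in the opposite direction.
        # Moving it to any other node leaves the roles unchanged.
        if new_host == self.secondary:
            self.primary, self.secondary = self.secondary, self.primary

    def io_path(self, vm_host: str) -> str:
        if vm_host == self.primary:
            return f"local I/O on {vm_host}, HA write to {self.secondary}"
        # VM runs on a node without the primary copy: I/O crosses the
        # federation network to the node holding the primary.
        return f"{vm_host} -> federation network -> {self.primary}"


vm1 = DataContainerPair(primary="node1", secondary="node2")
vm1.on_vm_moved("node2")
print(vm1.primary, vm1.secondary)  # node2 node1
print(vm1.io_path("node2"))        # local I/O on node2, HA write to node1
print(vm1.io_path("node3"))        # node3 -> federation network -> node2
```

The model makes the trade-off visible: a VM on a node without a copy still works, but every I/O pays a network hop that data locality avoids.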

Now that you have a greater understanding of how virtual machine data is stored, we can take a look at how the DVP automatically manages this data, and as administrators, how we can manage this data as the environment grows.

In the next post, I will explore how the HPE SimpliVity IWO mechanism automatically manages this data.

More information about the HPE SimpliVity hyperconverged platform is available online:

- HPE SimpliVity Data Virtualization Platform provides a high-level overview of the architecture

- You’ll find a detailed description of HPE SimpliVity features such as IWO in this technical whitepaper: HPE SimpliVity Hyperconverged Infrastructure for VMware vSphere

![picture2-1555105142615[1] picture2-1555105142615[1]](https://builddaylive.com/wp-content/uploads/2019/05/picture2-15551051426151-1024x255-394x330.png)