Data Protection with HPE SimpliVity, Part 2: The best disaster is one that never happens

As explained in the first blog in this series, HPE SimpliVity is designed from the ground up for simplified global management, data efficiency, and easy data protection. While no system on the planet can prevent a disaster, IT infrastructures can be engineered to minimize the impact on the business. Because protecting data is a multidimensional problem, it is important to make sure your data is protected against a wide range of risks. Ideally, any issues with the availability of the environment should be handled gracefully and automatically, to avoid or at least minimize downtime and human intervention.

You cannot prevent disaster, but you can prepare

Creating backups is great for capturing data at a single point in time for later recovery, but ensuring the availability of the data means that the hardware that stores and provides access to it needs to be as resilient to failure as possible. The HPE SimpliVity 380 and 2600 platforms are built on the HPE ProLiant DL380 and HPE Apollo 2000 hardware platforms respectively, and those platforms are designed and tested for resiliency. HPE hardware features such as certified RAM, redundant power and cooling, and hardware-level security that ensures the integrity of BIOS and firmware provide the foundation for a highly resilient hyperconverged node.

Data integrity is improved by only acknowledging writes when the data is properly persisted, and by performing regular integrity checks of the data after it is written to disk. Data can be persisted directly to disk on the HPE SimpliVity 2600 or to the flash and super capacitor protected RAM on the HPE OmniStack Accelerator Card in the HPE SimpliVity 380. Once the data is persisted to local storage, it is protected from disk loss by utilizing hardware RAID.
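The two ideas in the paragraph above, acknowledging a write only after it is persisted and re-checking data after it is written, can be illustrated with a small sketch. This is not HPE SimpliVity code: the `LocalStore` class, its method names, and the use of SHA-256 checksums are all assumptions chosen for illustration.

```python
import hashlib
from typing import Dict, List, Tuple


class LocalStore:
    """Toy stand-in for a node's local storage (hypothetical, not an HPE API)."""

    def __init__(self) -> None:
        # block_id -> (data, checksum recorded at write time)
        self._blocks: Dict[str, Tuple[bytes, str]] = {}

    def write(self, block_id: str, data: bytes) -> bool:
        """Store the block with its checksum, then return the acknowledgment."""
        checksum = hashlib.sha256(data).hexdigest()
        self._blocks[block_id] = (data, checksum)
        # The acknowledgment is returned only once the block is stored.
        return block_id in self._blocks

    def corrupt(self, block_id: str) -> None:
        """Simulate silent corruption: change the data but not the checksum."""
        data, checksum = self._blocks[block_id]
        self._blocks[block_id] = (data + b"!", checksum)

    def scrub(self) -> List[str]:
        """Re-hash every block and return the IDs that no longer match."""
        return [
            block_id
            for block_id, (data, checksum) in self._blocks.items()
            if hashlib.sha256(data).hexdigest() != checksum
        ]


store = LocalStore()
acked = store.write("vm1-block0", b"payload")
clean_scrub = store.scrub()      # nothing is damaged yet
store.corrupt("vm1-block0")
dirty_scrub = store.scrub()      # the damaged block is reported
```

A real system would persist to durable media and repair damaged blocks from a redundant copy; the sketch only shows the ordering of persist-then-acknowledge and the idea of a periodic integrity check.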

HCI technology built to withstand data center disasters

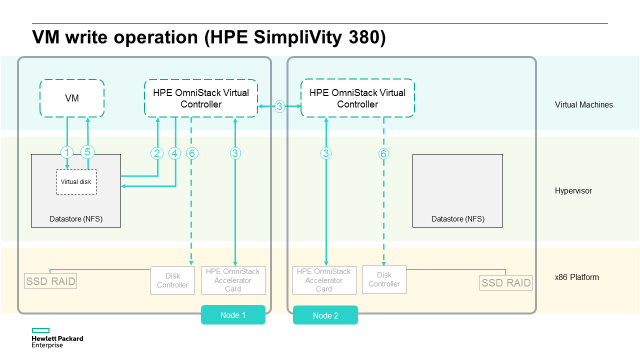

In spite of the careful planning and engineering that goes into resilient systems, hyperconverged infrastructure (HCI) nodes do fail. To protect against this eventuality, every VM and backup within an HPE SimpliVity cluster has two complete copies stored on two separate nodes within the cluster. As this illustration shows, HPE SimpliVity mirrors every write from the primary node to the secondary node immediately upon accepting the write from the VM. This mirroring occurs inline, before deduplication. All data processing then occurs independently on each node, and the write is acknowledged to the VM only after both nodes have successfully persisted it.
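The write path described above can be sketched in a few lines. The example below is a simplified model, not the actual Data Virtualization Platform: `StorageNode` and `mirrored_write` are hypothetical names, and the sketch covers only the healthy-cluster rule that the VM receives an acknowledgment after both copies are persisted. How the real platform handles writes while one node is down is not modeled here.

```python
from typing import Dict


class StorageNode:
    """Hypothetical model of one HCI node's storage."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.online = True
        self.persisted: Dict[str, bytes] = {}

    def persist(self, key: str, data: bytes) -> bool:
        """Persist a write locally; report failure if the node is down."""
        if not self.online:
            return False
        self.persisted[key] = data
        return True


def mirrored_write(primary: StorageNode, secondary: StorageNode,
                   key: str, data: bytes) -> bool:
    """Mirror the raw write to the secondary, then persist on both nodes.

    Returns True (the acknowledgment to the VM) only if both nodes
    report that the write was persisted.
    """
    secondary_ok = secondary.persist(key, data)  # mirrored copy
    primary_ok = primary.persist(key, data)      # local copy
    return primary_ok and secondary_ok


node_a = StorageNode("node-a")
node_b = StorageNode("node-b")

ack_healthy = mirrored_write(node_a, node_b, "vm1/block0", b"data")

node_b.online = False
ack_one_down = mirrored_write(node_a, node_b, "vm1/block1", b"more")
```

In this toy model, `ack_healthy` is `True` and both nodes hold `vm1/block0`, while `ack_one_down` is `False` because only one copy could be persisted.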

If the primary storage node for a VM fails, all reads and writes simply fail over to the secondary node for the VM’s storage. If the VM was not affected by the failure (for example, it was running on a compute node), the failover will occur transparently and the applications running inside the VM will not notice. If the VM failed along with the storage, then the hypervisor will restart the VM on a healthy node, provided this is properly configured within vSphere or SCVMM. Most often, an HPE SimpliVity subsystem on a node (the OmniStack Virtual Controller or Accelerator Card) can fail without affecting the ability to run VMs on that node, in which case the applications running inside the VMs will experience no downtime.
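The failure cases in the paragraph above reduce to a small decision table. The sketch below encodes it as a function; the `Outcome` names and the two boolean inputs are illustrative assumptions, and the "manual restart" branch is an inference about what happens when hypervisor restart is not configured, not something stated by HPE.

```python
from enum import Enum


class Outcome(Enum):
    TRANSPARENT_FAILOVER = "I/O fails over to the secondary copy; VM keeps running"
    AUTOMATIC_RESTART = "hypervisor restarts the VM on a healthy node"
    MANUAL_RESTART = "VM stays down until it is restarted by an operator"


def outcome_after_primary_storage_failure(vm_also_failed: bool,
                                          ha_restart_configured: bool) -> Outcome:
    """Classify what a VM experiences when its primary storage node fails."""
    if not vm_also_failed:
        # The VM was running elsewhere (for example, on a compute node), or
        # only the storage subsystem on its node failed.
        return Outcome.TRANSPARENT_FAILOVER
    if ha_restart_configured:
        # The VM went down with the node, and the hypervisor (vSphere or
        # SCVMM) is set up to restart it.
        return Outcome.AUTOMATIC_RESTART
    # Assumption: without automatic restart, someone has to start the VM.
    return Outcome.MANUAL_RESTART


case_compute_node = outcome_after_primary_storage_failure(False, True)
case_node_lost = outcome_after_primary_storage_failure(True, True)
case_no_ha = outcome_after_primary_storage_failure(True, False)
```

In all three cases the VM's data remains available on the secondary node; the outcomes differ only in whether and how the VM itself keeps running.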

Putting resiliency to the test

All of this data protection is built into the core functionality of the HPE SimpliVity Data Virtualization Platform and requires no configuration or additional licensing on the part of customers. It was designed from the beginning to be highly tolerant of failures. Prospects performing a proof of concept are often encouraged to put a full load on all nodes, then pull one disk in each node at the same time. It does not matter which disk, because the use of RAID in each node makes that node's storage tolerant of disk failure.

Customers can then fail an entire node while the disks are still removed. VMs on that node will go down but, assuming the hypervisor is properly configured, they will restart on another node with full access to all their data. This is possible because a full copy of the VM's storage resides on a second node, and the hypervisor management can automatically fail the VM over to a healthy node. This combination minimizes the outage experienced when a node fails.
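The proof-of-concept test described above can be reasoned about with a toy availability model. Everything in the sketch is an assumption for illustration: the `HciNode` class, the three-node cluster, the VM placements, and in particular the `disk_failures_tolerated = 1` setting, since the actual tolerance depends on the RAID level configured in each node.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class HciNode:
    name: str
    online: bool = True
    disks_failed: int = 0
    disk_failures_tolerated: int = 1  # assumption; depends on the RAID level

    def storage_available(self) -> bool:
        """A node serves data if it is up and RAID can absorb its disk losses."""
        return self.online and self.disks_failed <= self.disk_failures_tolerated


def vm_data_accessible(replicas: Tuple[HciNode, HciNode]) -> bool:
    """A VM's data is reachable if at least one of its two copies is available."""
    return any(node.storage_available() for node in replicas)


a, b, c = HciNode("node-a"), HciNode("node-b"), HciNode("node-c")

# Hypothetical placement: each VM has full copies on two different nodes.
placements: Dict[str, Tuple[HciNode, HciNode]] = {
    "vm1": (a, b),
    "vm2": (b, c),
    "vm3": (c, a),
}

# Step 1: pull one disk in every node at the same time.
for node in (a, b, c):
    node.disks_failed = 1

# Step 2: fail an entire node while the disks are still removed.
a.online = False
after_one_node = {vm: vm_data_accessible(r) for vm, r in placements.items()}

# Going beyond the described test: lose a second node as well.
b.online = False
after_two_nodes = {vm: vm_data_accessible(r) for vm, r in placements.items()}
```

Under these assumptions every VM still has an accessible copy after the disk pulls plus one node failure. Losing a second node, which goes beyond the test described above, leaves `vm1` without a reachable copy in this model because both of its replicas lived on the failed nodes.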

The HPE SimpliVity hyperconverged platform even has built-in features that can resurrect lost data when something truly disastrous occurs. More on that in a future post. Until then, the Data Protection on HPE SimpliVity Platforms white paper provides an even deeper view of the technology.

![picture2-1555105142615](https://builddaylive.com/wp-content/uploads/2019/05/picture2-15551051426151-1024x255-394x330.png)